About

The Brown-UMBC Reinforcement Learning and Planning (BURLAP) java code library is for the use and development of single or multi-agent planning and learning algorithms and domains to accompany them. BURLAP uses a highly flexible system for defining states and and actions of nearly any kind of form, supporting discrete continuous, and relational domains. Planning and learning algorithms range from classic forward search planning to value function-based stochastic planning and learning algorithms. Also included is a set of analysis tools such as a common framework for the visualization of domains and agent performance in various domains.

BURLAP is licensed under the permissive Apache 2.0 license.

For more background information on the project and the people involved, see the Information page.

Where to git it

BURALP uses Maven and is available on Maven Central! That means that if you'd like to create a project that uses BURLAP, all you need to do is add the following dependency to the <dependencies> section of your projects pom.xml

You can also get the full BURLAP source to manually compile/modify from Github at:

https://github.com/jmacglashan/burlap

Alternatively, you can directly download precompiled jars from Maven Central from here. Use the jar-with-dependencies if you want all dependencies included.

Prior versions of BURLAP are also available on Maven Central, and branches on github.

Tutorials and Example Code

Short video tutorials, longer text tutorials, and example code are available for BURLAP. All code can be found in our examples repository, which also provides the kind of POM file and file sturcture you should consider using for a BURLAP project. The example repository can be found at:

https://github.com/jmacglashan/burlap_examples/

Video Tutorials

Written Tutorials

- Hello GridWorld! - a tutorial on acquiring and linking with BURLAP

- Building a Domain

- Using Basic Planning and Learning Algorithms

- Creating a Planning and Learning Algorithm

- Solving Continuous Domains

- Building an OO-MDP Domain

Documentation

Java documentation is provided for all of the source code in BURLAP. You can find an online copy of the javadoc at the below location.

http://burlap.cs.brown.edu/doc/index.html

Features

Current

- A highly felixible state representation in which you define states with regular Java code and only need to implement a short interface. This enables support for discrete, continuous, relational, or any other kind of state representation that you can code! BURLAP also has optional interfaces to provide first class support for the rich OO-MDP state representation [1].

-

Supported problem formalisms

- Markov Decision Processes (single agent)

- Stochastic Games (multi-agent)

- Partially Observable Markov Decision Processes (single agent)

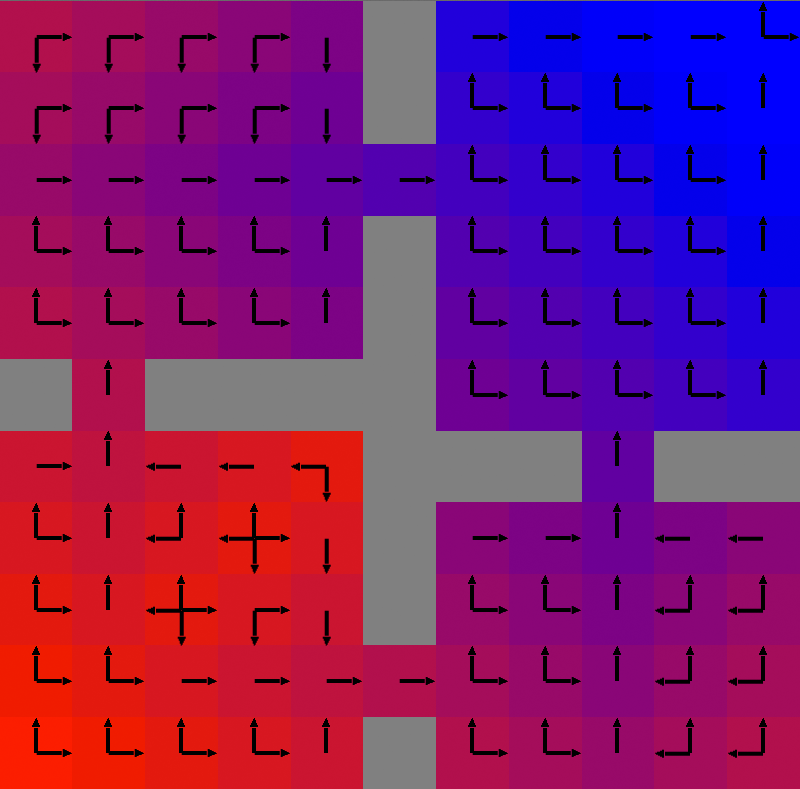

- Tools for visualizing and defining visualizations of states, episodes, value functions, and policies.

- Tools for setting up experiments with multiple learning algorithms and plotting the performance using multiple performance metrics.

- An extendable shell framework for controlling experiments at runtime.

- Tools for creating multi-agent tournaments.

-

Classic goal-directed deterministic forward-search planning.

- Breadth-first Search

- Depth-first Search

- A*

- IDA*

- Statically Weighted A* [2]

- Dynamically Weighted A* [3]

-

Stochastic Planning.

- Value Iteration [4]

- Policy Iteration

- Prioritized Sweeping [20]

- Real-time Dynamic Programming [5]

- UCT [6]

- Sparse Sampling [17]

- Bounded Real-time Dynamic Programming [21]

-

Learning.

- Q-learning [7]

- SARSA(λ) [8]

- Actor Critic [9]

- Potential Shaped RMax [12]

- ARTDP [5]

-

Value Function Approximation

- Gradient Descent SARSA(λ) [8]

- Least-Squares Policy Iteration [18]

- Fitted Value Iteration [24]

- Framework for implementing linear and non-linear VFA

- CMACs/Tile Coding [10]

- Radial Basis Functions

- Fourier Basis Functions [19]

- The Options framework [11] (supported in most single agent planning and learning algorithms).

- Reward Shaping

- Inverse Reinforcement Learning

- Maximum Margin Apprenticeship Learning [16]

- Multiple Intentions Maximum-likelihood Inverse Reinforcement Learning [22]

- Receding Horizon Inverse Reinforcement Learning [23]

- Multi-agent Q-learning and Value Iteration, supporting

- Q-learning with an n-step action history memory

- Friend-Q [13]

- Foe-Q [13]

- Correlated-Q [14]

- Coco-Q [15]

- Single-agent partially observable planning algorithms

- Finite horizon optimal tree search

- QMDP [25]

- Belief MDP conversion for use with standard MDP algorithms

-

Pre-made domains and domain generators.

- Grid Worlds

- Domains represented as graphs

- Blocks World

- Lunar Lander

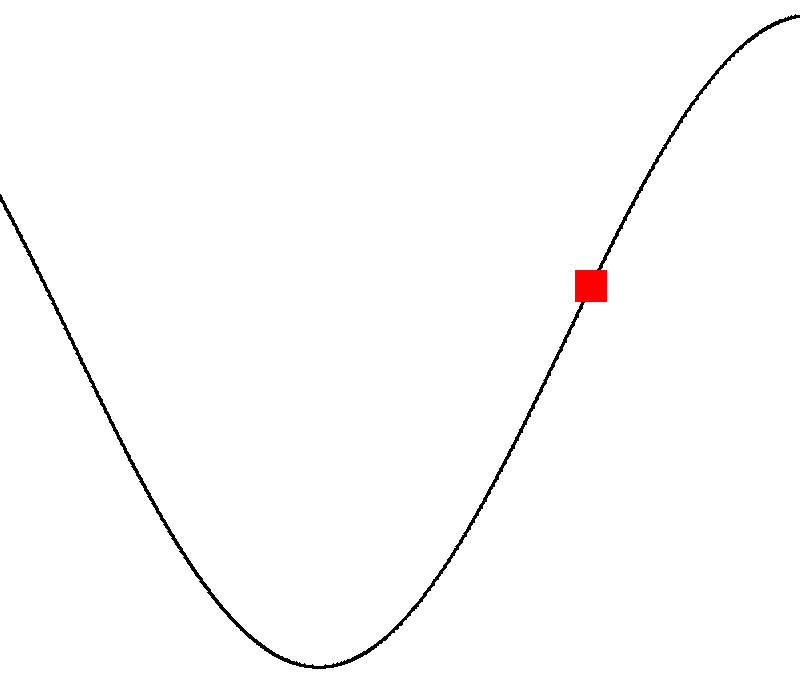

- Mountain Car

- Cart Pole

- Frostbite

- Blockdude

- Grid Games (a multi-agent stochastic games domain)

- Multiple classic Bimatrix games.

- RLGlue agent and environment interfacing

- Extensions for controlling ROS-powered robots

- Extensions for controlling Minecraft

Features in development

- Learning from human feedback algorithms

- POMDP algorithms like POMCP and PBVI

- General growth of all other algorithm classes already included

References

- Diuk, C., Cohen, A., and Littman, M.L.. "An object-oriented representation for efficient reinforcement learning." Proceedings of the 25th international conference on Machine learning (2008). 240-270.

- Pohl, Ira. "First results on the effect of error in heuristic search". Machine Intelligence 5 (1970): 219-236.

- Pohl, Ira. "The avoidance of (relative) catastrophe, heuristic competence, genuine dynamic weighting and computational issues in heuristic problem solving (August, 1973)

- Puterman, Martin L., and Moon Chirl Shin. "Modified policy iteration algorithms for discounted Markov decision problems." Management Science 24.11 (1978): 1127-1137.

- Barto, Andrew G., Steven J. Bradtke, and Satinder P. Singh. "Learning to act using real-time dynamic programming." Artificial Intelligence 72.1 (1995): 81-138.

- Kocsis, Levente, and Csaba Szepesvari. "Bandit based monte-carlo planning." ECML (2006). 282-293.

- Watkins, Christopher JCH, and Peter Dayan. "Q-learning." Machine learning 8.3-4 (1992): 279-292.

- Rummery, Gavin A., and Mahesan Niranjan. On-line Q-learning using connectionist systems. University of Cambridge, Department of Engineering, 1994.

- Barto, Andrew G., Richard S. Sutton, and Charles W. Anderson. "Neuronlike adaptive elements that can solve difficult learning control problems." Systems, Man and Cybernetics, IEEE Transactions on 5 (1983): 834-846.

- Albus, James S. "A theory of cerebellar function." Mathematical Biosciences 10.1 (1971): 25-61.

- Sutton, Richard S., Doina Precup, and Satinder Singh. "Between MDPs and semi-MDPs: A framework for temporal abstraction in reinforcement learning." Artificial intelligence 112.1 (1999): 181-211.

- Asmuth, John, Michael L. Littman, and Robert Zinkov. "Potential-based Shaping in Model-based Reinforcement Learning." AAAI. 2008.

- Littman, Michael L. "Markov games as a framework for multi-agent reinforcement learning." ICML. Vol. 94. 1994.

- Greenwald, Amy, Keith Hall, and Roberto Serrano. "Correlated Q-learning." ICML. Vol. 3. 2003.

- Sodomka, Eric, Hilliard, E., Littman, M., & Greenwald, A. "Coco-Q: Learning in Stochastic Games with Side Payments." Proceedings of the 30th International Conference on Machine Learning (ICML-13). 2013.

- Abbeel, Pieter, and Andrew Y. Ng. "Apprenticeship learning via inverse reinforcement learning." Proceedings of the twenty-first international conference on Machine learning. ACM, 2004.

- Kearns, Michael, Yishay Mansour, and Andrew Y. Ng. "A sparse sampling algorithm for near-optimal planning in large Markov decision processes." Machine Learning 49.2-3 (2002): 193-208.

- Lagoudakis, Michail G., and Ronald Parr. "Least-squares policy iteration." The Journal of Machine Learning Research 4 (2003): 1107-1149

- G.D. Konidaris, S. Osentoski and P.S. Thomas. Value Function Approximation in Reinforcement Learning using the Fourier Basis. In Proceedings of the Twenty-Fifth Conference on Artificial Intelligence, pages 380-385, August 2011.

- Li, Lihong, Michael L. Littman, and L. Littman. Prioritized sweeping converges to the optimal value function. Tech. Rep. DCS-TR-631, 2008.

- McMahan, H. Brendan, Maxim Likhachev, and Geoffrey J. Gordon. "Bounded real-time dynamic programming: RTDP with monotone upper bounds and performance guarantees." Proceedings of the 22nd international conference on Machine learning. ACM, 2005.

- Babes, Monica, et al. "Apprenticeship learning about multiple intentions." Proceedings of the 28th International Conference on Machine Learning (ICML-11). 2011.

- MacGlashan, James and Littman, Micahel, "Between imitation and intention learning," in Proceedings of the International Joint Conference on Artificial Intelligence, 2015.

- Gordon, Geoffrey J. "Stable function approximation in dynamic programming." Proceedings of the twelfth international conference on machine learning. 1995.

- Littman, M.L., Cassandra, A.R., Kaelbling, L.P., "Learning Policies for Partially Observable Environments: Scaling Up," in Proceedings of the 12th Internaltion Conference on Machine Learning. 1995.