Tutorial: Basic Planning and Learning

Tutorials > Basic Planning and Learning > Part 3

- Introduction

- Creating the class shell

- Initializing the data members

- Setting up a result visualizer

- Planning with BFS

- Planning with DFS

- Planning with A*

- Planning with Value Iteration

- Learning with Q-Learning

- Learning with Sarsa(λ)

- Live Visualization

- Value Function and Policy Visualization

- Experimenter Tools and Performance Plotting

- Conclusion

Planning with BFS

One of the most basic deterministic planning algorithms is breadth-first search, which we will demonstrate first. Since we will be testing a number of different planning and learning algorithms in this tutorial, we will define a separate method for using each planning or learning algorithm and then you can simply select which one you want to try in the main method of the class. We will also pass each of these methods the output path to record its results. Lets start by defining the BFS method.

public void BFSExample(String outputPath){

DeterministicPlanner planner = new BFS(domain, goalCondition, hashingFactory);

Policy p = planner.planFromState(initialState);

PolicyUtils.rollout(p, initialState, domain.getModel()).write(outputPath + "bfs");

}

The first part of the method creates an instance of the BFS planning algorithm, which itself is a subclass of the DeterministicPlanner class. To instantiate BFS, it only requires a reference to the domain, the goal condition for which it should search, and the HashableStateFactory. Because BFS implements the Planner interface, planning can be initiated by calling the planFromState method and passing it the initial state form which it should plan. The planFromState method automatically returns a Policy object. Using the PolicyUtils class, we rollout the policy from an initial state. This method requires the model of the envionrment (or alternatively, you can have it rolled out within an actual environment). The result of the rollout method is an Episode object, which is a record of the state, action, and reward sequence from rolling out the policy. Episode objects can be written to disk, using the write method, which we call here.

Using non-default policies

Each Planner implementation will return a different kind of Policy from planFromState that is relevant for the results the Planner stores. However, often times, after calling the planFromState method, you can wrap a different policy than the one that is returned around your planner instance to get slightly different behavior. For example, BFS's planFromState will return an SDPlannerPolicy instance, which will return the action the planner selected for any states on its solution path; if the policy is queried for a state not on the solution path, it will throw a runtime exception. However, you might choose to wrap a DDPlannerPolicy around BFS instead of using the returned SDPlannerPolicy. DDPlannerPolicy will act the same as SDPlannerPolicy except rather than throw a runtime exception if an action selection is queried for a state not on the current solution path, it will transparently recall planFromState on the new state to get an action to return; that is, it will perform replanning.

And that's all you need to code to plan with BFS on a defined domain and task!

Using the EpisodeSequenceVisualizer GUI

Now that the method to perform planning with BFS is defined, add a method call to it in your main method.

public static void main(String[] args) {

BasicBehavior example = new BasicBehavior();

String outputPath = "output/"; //directory to record results

//run example

example.BFSExample(outputPath);

//run the visualizer

example.visualize(outputPath);

}

Note that our output path ended with a '/'. Whatever path you use, you should include the trailing '/' since the code we wrote to write the file will automatically append to that path name.

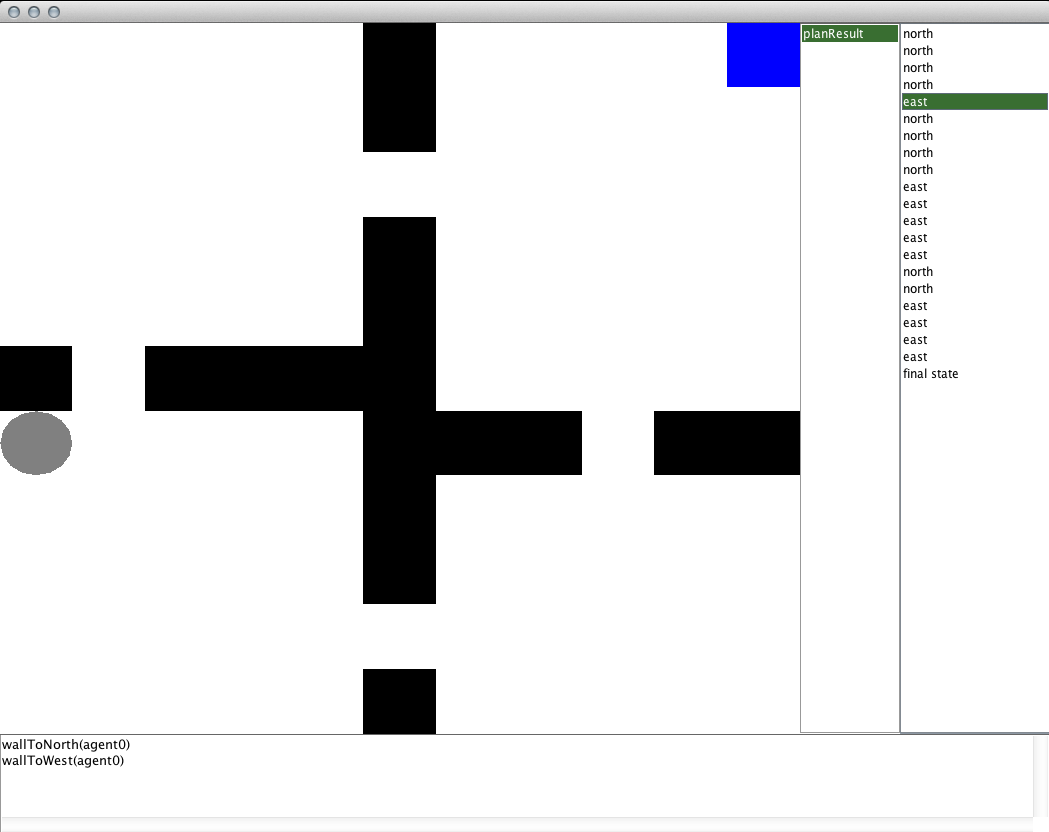

With the planning method hooked up, run the code! Because the task is simple, BFS should find a solution very quickly and print to the standard output the number of nodes it expanded in its search. Following that, the GUI should be launched for viewing the EpisodeAnalysis object that was recorded to a file. You should see something like the below image appear.

The main panel in the center of the window is used to render the current state selected. The text box at the bottom of the window will list all of the propositional functions that are true in that state, if the State is an OOState and the domain includes PropositionalFunction definitions. The leftmost list on the right side of the window lists all of the episode files that were recorded in the directory passed to the EpisodeSequenceVisualizer constructor. After selecting one of those instances, the list of actions taken in the episode are listed on the right-most list. Note that the action corresponds to the action that will be taken in the state that is currently visualized, so the result of the action will be seen in the next state. In the GridWorldDomain visualizer, the black cells represent walls, the grey circle represents the agent, and the blue cell represents the location object that we made.

Planning with DFS

Another common search-based planning algorithm is depth-first search (DFS). Define the below method to provide a means to solve the task with DFS.

public void DFSExample(String outputPath){

DeterministicPlanner planner = new DFS(domain, goalCondition, hashingFactory);

Policy p = planner.planFromState(initialState);

PolicyUtils.rollout(p, initialState, domain.getModel()).write(outputPath + "dfs");

}

You will notice that the code for DFS is effectively identical to the previous BFS code that we wrote, only this time the DeterministicPlanner is instantiated with a DFS object instead of a BFS object. DFS has a number of other constructors that allow you to specify other parameters such a depth limit, or maintaining a closed list. Feel free to experiment with them.

After you have defined the method, you can call it from the main method like we did the BFS method and visualize the results in the same way. Since DFS is not an optimal planner, it is likely that the solution it gives you will be much worse than the one BFS gave you!

Planning with A*

One of the most well known optimal search-based planning algorithms is A*. A* is an informed planner because it takes as input an admissible heuristic that estimates the cost to the goal from any given state. We can also use A* to plan a solution for our task as long as we also take the additional step of defining a heuristic to use (or you can use a NullHeuristic which will make A* uninformed). The below code defines a method for using A* with a Manhattan distance to goal heuristic.

public void AStarExample(String outputPath){

Heuristic mdistHeuristic = new Heuristic() {

public double h(State s) {

GridAgent a = ((GridWorldState)s).agent;

double mdist = Math.abs(a.x-10) + Math.abs(a.y-10);

return -mdist;

}

};

DeterministicPlanner planner = new AStar(domain, goalCondition, hashingFactory,

mdistHeuristic);

Policy p = planner.planFromState(initialState);

PolicyUtils.rollout(p, initialState, domain.getModel()).write(outputPath + "astar");

}

To implement our heursitic, we made an annonmous class that implements Heuristic, which requires implementing the h method. We typecast the state to a GridWorldState, pull out the agent, and compute the Manhatten distance to 10, 10, our goal location. The h method then returns the negative value of the distance. We return the negative value, because BURLAP is based on rewards rather than costs. However, negative rewards are equivalent to costs, so the heursitic we give must be a non-positive value (<= 0). Note that A* also only operates on costs (negative rewards), so whenever using A*, you should make sure that the reward function for your domain returns negative values. By default, GridWorldDomain uses a UniformCostRF, which causes all rewards to be -1.

Beyond defining a heuristic for A*, instantiating it and using it works mostly the same!